Basic performance levels and human error types: the generic error-modelling system (GEMS)

This post is part of a series of notes I collated during my studies at UCL’s Interaction Centre (UCLIC).

Notes on the generic error-modelling system (GEMS) conceptual framework and the origins of basic human error types.

Rasmussen’s skill-rule-knowledge classification of human performance:

- Skill-based slips (and lapses).

- Rule-based mistakes.

- Knowledge-based mistakes.

Generic Error Modelling System (GEMS)

- Slips and lapses – actions deviate from current intention due to execution failures and/or storage failures.

- Mistakes – actions may run according to plan, but the plan itself is inadequate to achieve its desired outcome.

The three error types may be differentiated by a variety of processing, representational and task-related factors.

1. Why the slips-mistakes dichotomy is not enough

Execution failures (slips and lapses) and planning failures (mistakes) were a useful first approximation.

Level of cognitive operation

- Mistakes occur at the level of intention formation.

- Slips and lapses are associated with failures at the more subordinate levels of action selection, execution and intention storage.

Both slips and mistakes can take strong-but-wrong forms, where the erroneous behaviour is more in keeping with past practice than the current circumstances demand.

2. Distinguishing three error types

The three basic error types may be distinguished along a variety of task, representational and processing dimensions.

| Performance level | Error type |
|---|---|
| Skill-based | Slips and lapses |
| Rule-based | RB mistakes |
| Knowledge-based | KB mistakes |

2.1. Type of activity

A key distinction is the question of whether or not an individual is engaged in problem solving at the time an error occurred.

Behaviour at the SB level is primarily a way of dealing with routine and non-problematic activities in familiar situations.

Both RB and KB performance are only called into play after the individual has become conscious of a problem, that is, the unanticipated occurrence of some externally or internally produced event or observation that demands a deviation from the current plan.

SB slips generally precede the detection of a problem, while RB and KB mistakes arise during subsequent attempts to find a solution.

2.2. Focus of attention

SB level – wherever else the limited attentional resource is being directed at that moment, it will not be focussed on the routine task in hand.

RB and KB levels – the limited attentional focus will not have strayed far from some feature of the problem configuration.

2.3. Control mode

Performance at both SB and RB levels is characterised by feed-forward control emanating from stored knowledge structures (motor programs, schemata, rules).

SB level performance is based on feed-forward control and depends upon a very flexible and efficient dynamic internal world model.

RB level performance is goal-orientated, but structured by feed-forward control through a stored rule. Very often, the goal is not even explicitly formulated, but is found implicitly in the situation releasing the stored rules.

Control at the KB level is primarily of the feedback kind. This proceeds by setting local goals, observing the extent to which the actions are successful and then modifying them to minimise the discrepancy between the present position and the desired state. It is in essence error driven.
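The contrast between the two control modes can be sketched in code. This is only an illustration under stated assumptions: the numeric "position", the `gain`, the `tolerance` and both function names are hypothetical and do not come from Reason's text.

```python
def feedback_control(position: float, goal: float, gain: float = 0.5,
                     tolerance: float = 0.01, max_steps: int = 100) -> list[float]:
    """Error-driven loop (KB style): act, observe the discrepancy, adjust, repeat."""
    trajectory = [position]
    for _ in range(max_steps):
        discrepancy = goal - position      # observe how far off the desired state is
        if abs(discrepancy) <= tolerance:  # close enough: the local goal is met
            break
        position += gain * discrepancy     # modify the action to shrink the gap
        trajectory.append(position)
    return trajectory


def feed_forward_control(position: float, stored_steps: list[float]) -> list[float]:
    """Stored routine (SB/RB style): run the steps without checking the outcome."""
    trajectory = [position]
    for step in stored_steps:
        position += step
        trajectory.append(position)
    return trajectory
```

The feedback loop converges on the goal because every step is corrected by the observed discrepancy. The feed-forward routine simply ends wherever its stored steps take it, which is fine when the situation matches the routine and a source of error when it does not.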

Errors at the SB and RB levels occur while behaviour is under the control of largely automatic units within the knowledge base.

KB errors happen when the individual has ‘run out’ of applicable problem-solving routines and is forced to resort to attentional processing within the conscious workspace.

2.4. Expertise and the predictability of error types

At the SB level, the guidance of action tends to be snatched by the most active motor schema in the ‘vicinity’ of the node at which an attentional check is omitted or mistimed.

At the RB level, the most probable error involves the inappropriate matching of environmental signs to the situational component of well-tried ‘troubleshooting’ rules.

At the KB level, when the problem space is largely uncharted territory, it is less easy to specify in advance the shortcuts that might be taken in error.

Mistakes at the KB level have hit-and-miss qualities not dissimilar to the errors of beginners. No matter how expert people are at coping with familiar problems, their performance will begin to approximate that of novices once their repertoire of rules has been exhausted by the demands of a novel situation.

Expertise consists of having a large stock of appropriate routines to deal with a wide variety of contingencies.

Crucial differences between experts and novices lie in both the level and the complexity of their knowledge representation and rules.

Experts have a much larger collection of problem-solving rules than novices.

The more skilled an individual is in carrying out a particular task, the more likely it is that his or her errors will take ‘strong-but-wrong’ forms at the SB and RB levels of performance.

2.5. The ratio of error to opportunity

Virtually all adult actions, even when directed by knowledge-based processing, have very substantial skill-based and rule-based components.

Skill-based and rule-based processing are the hallmarks of expertise.

It is a safe generalisation that all activities are likely to involve far more SB and RB processing than KB processing.

2.6. The influence of situational factors

Errors at each of the three levels will vary in the degree to which they are shaped by both intrinsic (cognitive biases, attentional limitations) and extrinsic factors (the structural characteristics of the task, context effects).

2.7. Detectability

Mistakes are harder to detect than slips.

2.8. Relationship to change

In SB slips and lapses, the error-triggering changes generally involve a necessary departure from some well-established routine.

In RB mistakes, the nature of the likely changes is, in some degree, anticipated, either as a result of past encounters or because they are considered as likely possibilities. What is lacking is adequate knowledge of when such changes will occur and what precise forms they will take.

At the KB level, mistakes result from changes in the world that have neither been prepared for nor anticipated; the problem solver has encountered a novel situation.

The three error types can therefore be distinguished according to the degree of preparedness that exists prior to change.

3. A generic error-modelling system (GEMS)

| Dimension | Skill-based errors | Rule-based errors | Knowledge-based errors |
|---|---|---|---|
| Type of activity | Routine actions | Problem-solving activities | Problem-solving activities |
| Focus of attention | On something other than the task at hand | Directed at problem-related issues | Directed at problem-related issues |
| Control mode | Mainly by automatic processors (schemata) | Mainly by automatic processors (stored rules) | Limited, conscious processes |
| Predictability of error types | Largely predictable strong-but-wrong errors (actions) | Largely predictable strong-but-wrong errors (rules) | Variable |
| Ratio of error to opportunity for error | Though absolute numbers may be high, these constitute a small proportion of the total number of opportunities for error | Though absolute numbers may be high, these constitute a small proportion of the total number of opportunities for error | Absolute numbers small, but opportunity ratio high |
| Influence of situational factors | Low to moderate; intrinsic factors (frequency of prior use) likely to exert the dominant influence | Low to moderate; intrinsic factors (frequency of prior use) likely to exert the dominant influence | Extrinsic factors likely to dominate |
| Ease of detection | Detection usually fairly rapid and effective | Difficult and often only achieved through external intervention | Difficult and often only achieved through external intervention |
| Relationship to change | Knowledge of change not accessed at proper time | When and how anticipated change will occur unknown | Changes not prepared for or anticipated |

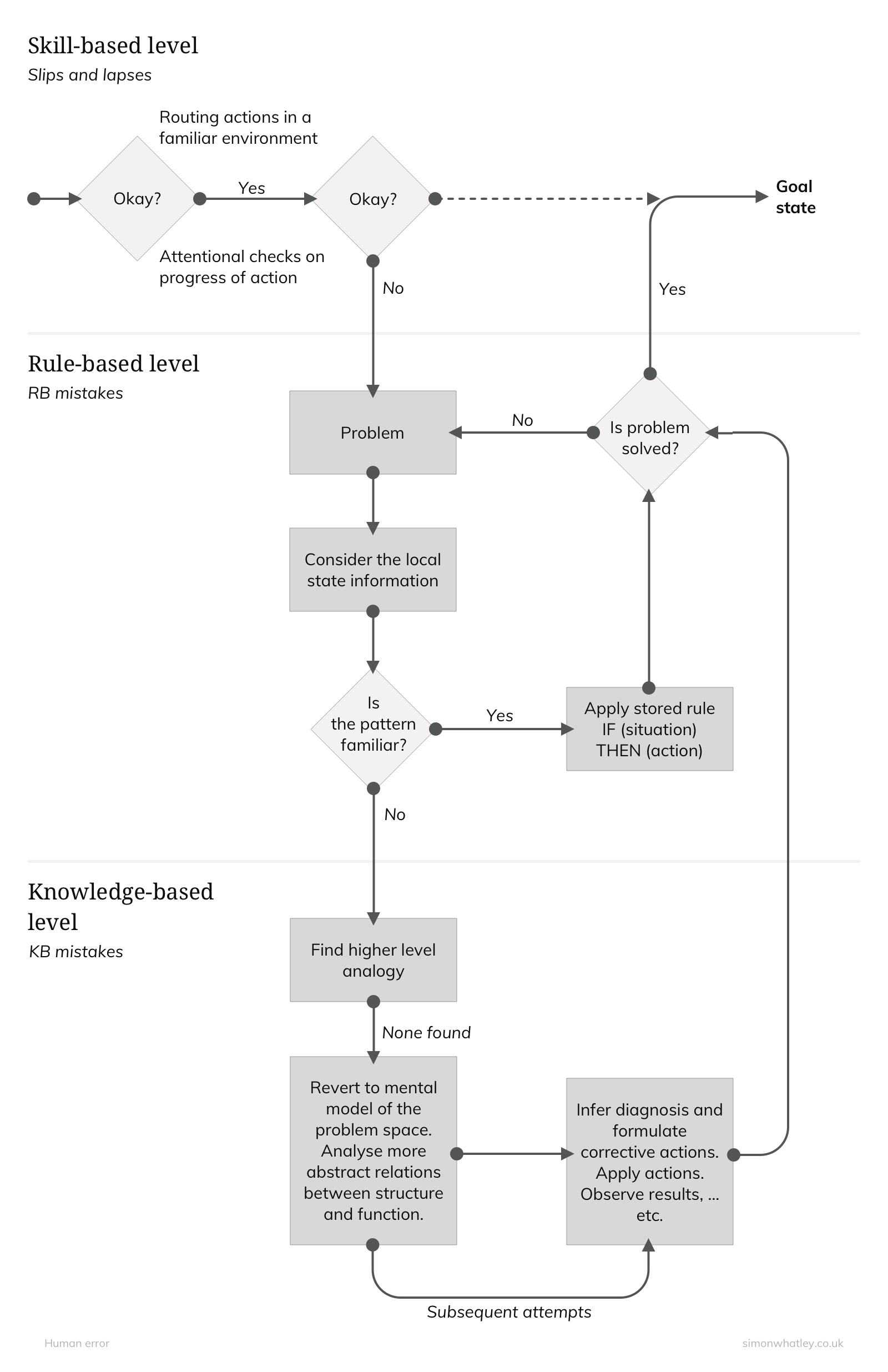

Operations divided into two areas:

- Those that precede the detection of a problem (the SB level).

- Those that follow it (the RB and KB levels).

Errors (slips and lapses) occurring prior to problem detection are seen as being mainly associated with monitoring failures, while those that appear subsequently (RB and KB mistakes) are subsumed under the general heading of problem-solving failures.

3.1. Monitoring failures

Well-practised actions carried out by skilled individuals in familiar surroundings comprise segments of pre-programmed behavioural sequences interspersed with attentional checks upon progress. These checks involve bringing the higher levels of the cognitive system (the ‘workspace’) momentarily into the control loop in order to establish:

- Whether the actions are running according to plan; and

- More complexly, whether the plan is still adequate to achieve the desired outcome.

Slips and lapses involve inattention, omitting to make a necessary check. But a significant number of action slips are also due to overattention, making an attentional check at an inappropriate point in an automated action sequence.

Figure 1: Outlining the dynamics of the generic error-modelling system (GEMS).

3.2. Problem-solving failures

A problem can be defined as a situation that requires a revision of the currently instantiated programme of action.

The departures from routine demanded by these situations can range from relatively minor contingencies, swiftly dealt with by pre-established corrective procedures, to entirely novel circumstances, requiring new plans and strategies to be derived from first principles.

“Humans, if given a choice, would prefer to act as context-specific pattern recognisers rather than attempting to calculate or optimise.” Rouse, 1981.

GEMS asserts that when confronted with a problem, humans are strongly biased to search for and find a pre-packaged solution at the RB level before resorting to the far more effortful KB level, even where the latter is demanded at the outset.

Only when people become aware that successive cycling around this rule-based route is failing to offer a satisfactory solution will the move down to the KB level take place.

Human beings are pattern matchers. They are strongly disposed to exploit the parallel and automatic operations of specialised, pre-established processing units: schemata, frames, scripts and memory organising packets. These knowledge structures are capable of simplifying the problem configuration by filling in the gaps left by missing or incomprehensible data on the basis of ‘default values’.

3.3. What determines switching between levels?

3.3.1. Between the SB and RB levels

The RB level is engaged when an attentional check upon progress detects a deviation from planned-for conditions.

If the deviation is minor and appropriate corrective rules are readily found, this phase will be terminated by a rapid return to the SB level.

3.3.2. Between the RB and KB levels

The switch from RB to the KB level occurs when the problem solver realises that none of his or her repertoire of rule-based solutions is adequate to cope with the problem.

Affective factors (subjective uncertainty and concern) are likely to play an important rôle.

Unconscious search for analogous problem-solving ‘packets’ will proceed in parallel with conscious ‘topographic’ reasoning.

3.3.3. Between the KB and SB levels

Activity at the KB level can be stopped by finding an adequate (or apparently so) solution to the problem. This will constitute a new plan of action requiring the execution of a fresh set of SB routines.

There will be rapid switching to and from the SB and KB levels until performance is back on some familiar track.

A plan of action represents a revised theory of the world and confirmation biases will lead to its continued retention, even in the face of contradictory evidence. Such ‘secondary errors’ may also be rendered more likely by the reduction in anxiety that accompanies the discovery of an apparent solution.
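The switching conditions described in section 3.3 can be summarised as a small state machine. This is a minimal sketch, not part of GEMS itself: the `Level` enum, the `next_level` function and its boolean flags are hypothetical names chosen for illustration, and the real model involves parallel and affective processes that a lookup like this ignores.

```python
from enum import Enum


class Level(Enum):
    SB = "skill-based"
    RB = "rule-based"
    KB = "knowledge-based"


def next_level(level: Level, *, deviation_detected: bool = False,
               rule_found: bool = False, rules_exhausted: bool = False,
               solution_found: bool = False) -> Level:
    """Return the performance level that follows, given what has just happened."""
    if level is Level.SB:
        # An attentional check that detects a deviation engages the RB level.
        return Level.RB if deviation_detected else Level.SB
    if level is Level.RB:
        if rule_found:
            return Level.SB   # corrective rule found: rapid return to routine
        if rules_exhausted:
            return Level.KB   # no stored rule is adequate: move down to KB
        return Level.RB       # keep cycling around the rule-based route
    # KB level: an adequate (or apparently adequate) solution yields a new plan,
    # which is executed as a fresh set of SB routines.
    return Level.SB if solution_found else Level.KB
```

For example, `next_level(Level.RB, rules_exhausted=True)` gives `Level.KB`, while `next_level(Level.RB)` stays at `Level.RB`, reflecting the bias towards searching for a pre-packaged solution first.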

4. Failure modes at the skill-based level

4.1. Inattention (omitted checks)

4.1.1. Double-capture slips

The greater part of the limited attentional resource is claimed either by some internal pre-occupation or by some external distractor at a time when a higher order intervention is needed to set the action along the currently intended pathway.

The necessary conditions for their occurrence:

- The performance of some well-practised activity in familiar surroundings.

- An intention to depart from custom.

- A departure point beyond which the ‘strengths’ of the associated action schemata are markedly different.

- Failure to make appropriate attentional checks.

The result is a strong habit intrusion.
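A toy sketch of a double-capture slip, assuming each action schema carries a single numeric "strength". The function name, the schema labels and the strength values are all hypothetical and only illustrate the conditions listed above.

```python
def select_schema(strengths: dict[str, float], intended: str,
                  attentional_check: bool) -> str:
    """Pick the schema that takes control at a departure point.

    With an attentional check, the current intention steers the action.
    Without one, control is captured by the strongest schema available.
    """
    if attentional_check:
        return intended
    return max(strengths, key=strengths.get)


# Hypothetical strengths: the daily commute is far better practised.
strengths = {"drive to work": 0.9, "drive to the shop": 0.2}

print(select_schema(strengths, "drive to the shop", attentional_check=True))
print(select_schema(strengths, "drive to the shop", attentional_check=False))
```

With the check in place the intended route is followed; with the check omitted, the stronger habit intrudes and the driver ends up heading to work.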

4.1.2. Omissions associated with interruptions

The failure to make the attentional check is compounded by some external event.

“I picked up my coat to go out when the phone rang. I answered it and then went out the front door without my coat.”

4.1.3. Reduced intentionality

Some delay intervenes between the formulation of an intention to do something and the time for this activity to be executed. This intention will probably become overlaid by other demands upon the conscious workspace.

Detached intentions – “I intended to close the window, but closed the cupboard door instead.”

Environmental capture – “I went into my bedroom intending to fetch my book. I took off my rings, looked in the mirror and came out again.”

What-am-I-doing-here experiences.

I-should-be-doing-something-but-I-can’t-remember-what experiences.

4.1.4. Perceptual confusions

These occur because the recognition schemata accept as a match for the proper object something that looks like it, is in the expected location or does a similar job.

Recognition schemata as well as action schemata become automatised to the extent that they accept rough rather than precise approximations of expected inputs.

4.1.5. Interference errors: blends and spoonerisms

Two currently active plans or, within a single plan, two action elements, can become entangled in the struggle to gain control of the effectors.

“I had just finished a conversation on the telephone when my secretary ushered in some visitors. I got up from my desk to greet them and said ‘Smith speaking’.”

4.2. Over-attention: mistimed checks

When an attentional check is omitted, the reins of action or perception are likely to be snatched by some contextually appropriate strong habit (action schema) or expected pattern (recognition schema).

Slips can also arise from exactly the opposite process when focal attention interrogates the progress of an action sequence at a time when control is best left to automatic ‘pilot’.

Omission – forgetting to switch on the kettle.

Repetition – pouring a second kettle of water into an already full teapot.

5. Failure modes at the rule-based level

In any given situation, a number of rules may compete for the right to represent the current state of the world. The system is extremely ‘parallel’ in that many rules may be active simultaneously.

Success in this race for instantiation depends upon:

- The rule should be matched either to salient features of the environment, or to the contents of some internally generated message.

- A rule’s competitiveness depends critically on its strength, the number of times a rule has been performed successfully in the past.

- The more specifically a rule describes the current situation, the more likely it is to win.

- Success also depends upon the degree of support a competing rule receives from other rules.
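The four factors above can be sketched as a simple scoring race. The notes give no formula, so the equal-weight additive score below is purely an assumption, as are the `Rule` fields, the 0 to 1 scales and the example values.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    match: float        # how well the rule's conditions fit the situation (0 to 1)
    strength: float     # normalised record of past successes (0 to 1)
    specificity: float  # how precisely the rule describes the situation (0 to 1)
    support: float      # backing received from other active rules (0 to 1)


def score(rule: Rule) -> float:
    """Equal-weight additive score; the weighting is an illustrative assumption."""
    return rule.match + rule.strength + rule.specificity + rule.support


def winning_rule(rules: list[Rule]) -> Rule:
    """Return the rule that wins the race for instantiation."""
    return max(rules, key=score)


general = Rule("general rule", match=0.6, strength=0.9, specificity=0.3, support=0.5)
exception = Rule("specific exception", match=0.9, strength=0.1, specificity=0.8, support=0.2)
print(winning_rule([general, exception]).name)  # prints "general rule"
```

In this made-up case the exception fits the situation better and is more specific, yet the general rule still wins on strength and support. That is the pattern behind a strong-but-wrong rule-based mistake.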

Rule-based errors fall into two general categories:

- RB mistakes that arise from the misapplication of good rules.

- RB mistakes that arise from the application of bad rules.

5.1. The misapplication of good rules

A ‘good rule’ is one with proven utility in a particular situation. It may nevertheless be misapplied in environmental conditions that share some common features with these appropriate states, but also possess elements demanding a different set of actions.

Strong-but-wrong rules.

5.1.1. The first exceptions

The strong-but-now-wrong rule will be applied.

Example: checking your wing mirror before leaving your parking space, you see a red car. Double-checking via your rear-view mirror, you see a red car some distance away. Pulling out of the space, you nearly collide with a red car. There were two of them.

5.1.2. Signs, countersigns and non-signs

Situations that should invoke exceptions to a more general rule do not necessarily declare themselves in an unambiguous fashion, particularly in complex, dynamic, problem solving tasks.

Three kinds of information present:

- Signs – inputs that satisfy some or all of the conditional aspects of the appropriate rule.

- Countersigns – inputs that indicate that the more general rule is inapplicable.

- Non-signs – inputs which do not relate to any existing rule, but which constitute noise within the pattern recognition system.

All three types of input may be present simultaneously within a given informational array.

5.1.3. Information overload

The difficulty of detecting countersigns is further compounded by the abundance of information confronting the problem solver in most real-life situations.

5.1.4. Rule strength

The chance of a particular rule gaining victory in the ‘race’ to provide a description or a prediction for a given problem situation depends critically upon its previous ‘form’, or the number of times it has achieved a successful outcome in the past.

The cognitive system is biased to favour strong rather than weak rules whenever the matching conditions are less than perfect.

5.1.5. General rules are likely to be stronger

Higher-level (more general) rules will be stronger than those lower down, because the situations that match them are encountered more frequently in the world.

5.1.6. Redundancy

Certain features of the environment will, with experience, become increasingly significant, while others will dwindle in their importance.

The acquisition of human skill is dependent upon the gradual appreciation of the redundancy present in the informational input.

Some cues will receive far more attention than others and this deployment bias will favour previously informative signs rather than the rarer countersigns.

5.1.7. Rigidity

Rule usage is subject to ‘cognitive conservatism’.

There’s a strong and stubborn tendency towards applying the familiar but cumbersome solution when simpler, more elegant solutions are readily available.

If a rule has been employed successfully in the past, then there is an almost overwhelming tendency to apply it again, even though the circumstances no longer warrant its use.

5.1.8. General versus specific rules

Where the individuating information is detected and where the ‘action’ consequences do not conflict with much stronger rules at a higher level, then people will operate at the more specific level.

5.2. The application of bad rules

Two broad classes:

- Encoding deficiencies – features of a particular situation are either not encoded at all or are misrepresented in the conditional component of the rule.

- Action deficiencies – the action component yields unsuitable, inelegant or inadvisable responses.

5.2.1. A developmental perspective

Three-stage process describing how children acquire adequate problem-solving routines (Karmiloff-Smith):

- Phase 1. Procedural Phase – at an early developmental stage, the behavioural output of the child is primarily data-driven.

- Phase 2. Meta-procedural Phase – behaviour is guided predominantly by rather rigid top-down knowledge structures (meaningful categories).

- Phase 3. Conceptual Phase – performance is guided by subtle control mechanisms that modulate the interaction between data-driven and top-down processing. A balance is struck between environmental feedback and rule-structures; neither predominates.

5.2.2. Encoding deficiencies in rules

Certain properties of the problem space are not encoded at all.

There are phases during the acquisition of complex skills when the cognitive demands of some component of the total activity screen out rule sets associated with other, equally important aspects.

Certain properties of the problem space may be encoded inaccurately.

The feedback necessary to disconfirm bad rules may be misconstrued or absent altogether.

‘Intuitive’ or ‘naïve’ physics – the erroneous beliefs people hold about the properties of the physical world.

An erroneous general rule may be protected by the existence of domain-specific exception rules.

The problem solver encounters relatively few exceptions to the general rule, as in the case of the impetus-based assumptions of naïve physics.

5.2.3. Action deficiencies in rules

The action component of a problem-solving rule can be ‘bad’ in varying degrees.

Wrong rules – errors in subtraction sums arise not from the incorrect recall of numbers, but from applying incorrect strategies.

Inelegant or clumsy rules – many problems afford the possibility of multiple routes to a solution. Some of these are efficient, elegant and direct; others are clumsy, circuitous and occasionally bizarre. In a forgiving environment or in the absence of expert instruction, some of these inelegant solutions become established as part of the rule-based repertoire.

Inadvisable rules – the rule-based solution may be perfectly adequate to achieve its immediate goal most of the time, but its regular employment can lead, on occasions, to avoidable accidents. The behaviour is not wrong (in the sense that it generally achieves its objective, though it may violate established codes or operating procedures), it does not have to be clumsy or inelegant, nor does it fall into the ‘plain crazy’ category; it is, in the long run, simply inadvisable.

“If near-accidents usually involve an initial error followed by error recovery, more may be learned about the technique of successful error recovery than about how the original error might have been avoided.”

6. Failure modes at the knowledge-based level

Two basic sources:

- ‘Bounded rationality’ and

- An incomplete or inaccurate mental model of the problem space.

A ‘problem configuration’ is defined as the set of cues, indicators, signs, symptoms and calling conditions that are immediately available to the problem solver and upon which he or she works to find a solution.

Static configurations – problems in which the physical characteristics of the problem space remain fixed regardless of the activities of the problem solver.

Reactive-dynamic configurations – the problem configuration changes as a direct consequence of the problem solver’s actions.

Multiple-dynamic configurations – the configuration can change both as a result of the problem solver’s activities and, spontaneously, due to independent situational or system factors.

Different configurations require different strategies and, as a consequence, elicit different forms of problem-solving pathology.

6.1. Selectivity

An important source of reasoning errors lies in the selective processing of task information. Mistakes will occur if attention is given to the wrong features or not given to the right features. Accuracy of reasoning performance is critically dependent upon whether the problem solver’s attention is directed to the logically important rather than the psychologically salient aspects of the problem configuration.

6.2. Workspace limitations

Reasoners at the KB level interpret the features of the problem configuration by fitting them into an integrated model.

The workspace operates by a ‘first in, first out’ principle.
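A ‘first in, first out’ workspace behaves like a bounded queue: once it is full, each new item pushes out the oldest one. The sketch below uses Python's `collections.deque`; the capacity of three and the item labels are hypothetical, chosen only to make the displacement visible.

```python
from collections import deque


def fill_workspace(items: list[str], capacity: int = 3) -> list[str]:
    """Feed items into a bounded FIFO workspace and return what remains in it."""
    workspace: deque[str] = deque(maxlen=capacity)
    for item in items:
        workspace.append(item)  # when full, the oldest item is dropped automatically
    return list(workspace)


print(fill_workspace(["alarm A", "gauge B", "valve C", "alarm D"]))
# prints ['gauge B', 'valve C', 'alarm D']
```

The first cue, "alarm A", has been displaced by the time the fourth arrives, which is one way early evidence can drop out of a reasoner's integrated model.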

6.3. Out-of-sight, out-of-mind

The availability heuristic has two faces:

- One gives undue weight to facts that come readily to mind.

- The other ignores that which is not immediately present.

6.4. Confirmation bias

Confirmation bias has its roots in effort after meaning. In the face of ambiguity, it rapidly favours one available interpretation and is then loath to part with it.

6.5. Overconfidence

Problem solvers and planners tend to justify their chosen course of action by focusing on evidence that favours it and by disregarding contradictory signs. This is compounded by the confirmation bias exerted by a completed plan of action.

6.6. Biased reviewing: the ‘check-off’ illusion

“Have I taken account of all possible factors bearing upon my choice of action?”

In retrospect, we fail to observe that the conscious workspace was, at any one moment, severely limited in its capacity and that its contents were rapidly changing fragments rather than systematic reviews of relevant material.

6.7. Illusory correlation

Problem solvers are poor at detecting many types of covariation.

6.8. Halo effects

Problem solvers will show a predilection for single orderings and an aversion to discrepant orderings.

6.9. Problems with causality

Problem solvers tend to oversimplify causality. Because they are guided primarily by the stored recurrences of the past, they will be inclined to underestimate the irregularities of the future.

Causal analysis is influenced by the representativeness and availability heuristics.

Problem solvers may also suffer from ‘creeping determinism’ or hindsight bias.

6.10. Problems with complexity

6.10.1. The Uppsala DESSY studies

Subjects fail to form any truly predictive model of the situation. Instead, they are primarily data-driven. This works well if feedback is immediate, but not if there is some form of delay.

6.10.2. The Bamberg Lohhausen studies

Mistakes divided into two groups:

- Primary mistakes made by almost all subjects

- Poor performance mistakes

Primary mistakes:

- Insufficient consideration of processes in time – subjects were more interested in the way things are now than in considering how they had developed over previous years.

- Difficulties in dealing with exponential development – subjects invariably underestimated the rate of change and were constantly surprised at the outcomes.

- Thinking in causal series instead of causal nets – subjects have a tendency to think in linear sequences. They are sensitive to the main effects of their actions upon the path to an immediate goal, but remain unaware of their side effects upon the remainder of the system.
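The difficulty with exponential development can be made concrete with a little arithmetic. The sketch below compares true compound growth with a linear extrapolation of the same rate. The starting value, the 10% rate and the 20 periods are hypothetical figures, not data from the Lohhausen studies, and treating the subjects' intuition as strictly linear is a simplifying assumption.

```python
def exponential_growth(initial: float, rate: float, periods: int) -> float:
    """Value after compounding `rate` over `periods` steps."""
    return initial * (1 + rate) ** periods


def linear_extrapolation(initial: float, rate: float, periods: int) -> float:
    """Naive estimate that adds the same first-period increase every step."""
    return initial * (1 + rate * periods)


actual = exponential_growth(100.0, 0.10, 20)
guess = linear_extrapolation(100.0, 0.10, 20)
print(f"actual: {actual:.0f}, linear guess: {guess:.0f}")
```

With these figures the compounded value is roughly 673, against a linear guess of 300, so the intuitive estimate falls short by more than half.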

Poor performance mistakes:

- Thematic vagabonding – “whenever subjects have difficulties dealing with a topic, they leave it alone, so that they don’t have to face their own helplessness more than necessary.”

- Encysting – mediated by bounded rationality, a poor self-assessment and a desire to escape from the evidence of one’s own inadequacy.

Confirmation biases become more marked with the experience of failure. Subjects look for confirming rather than disconfirming evidence.

6.11. Problems of diagnosis in everyday situations

The root of the problem in everyday diagnoses appears to be located in the complex interaction between two logical reasoning tasks:

- One serves to identify critical symptoms and the factual elements of the presented situation needing an explanation;

- The other is concerned with verifying whether the symptoms have been explained and whether the supplied situational factors are compatible with the favoured explanatory scenario.

References and further reading

- Reason, J. (1990). Human Error. Cambridge University Press. https://doi.org/10.1017/CBO9781139062367

Updated on: 13 February 2021